The Best Way to Compress Images in Flutter

Introduction

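As developers, we often build features that upload or save images. Some images are very large, so uploading or saving them uses a lot of network bandwidth and local storage. The answer is to compress the images first.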

Yesterday, while writing

Implementing WeChat Sharing in Flutter (Latest) [Flutter Series 23] mp.weixin.qq.com/s/PGpgau6mJLAbfKMVYqTuOg

I needed one particular technique: image compression.

For that I used flutter_image_compress.

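You may know that Dart already has image compression libraries, so why use a native one?

Efficiency. The flutter_image_compress README notes that image compression written in Dart is slow even in release builds, and moving it to an isolate does not solve the problem, so the plugin hands the work to the platform's native code instead.

So today, let's look at how to use it.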

1. flutter_image_compress

Installation

dependencies:
  flutter_image_compress: ^1.0.0-nullsafety

Import it where you use it:

import 'dart:io';
import 'dart:typed_data';

import 'package:flutter_image_compress/flutter_image_compress.dart';

/// Compress an image: File -> Uint8List
Future<Uint8List?> testCompressFile(File file) async {
  final result = await FlutterImageCompress.compressWithFile(
    file.absolute.path,
    minWidth: 2300,
    minHeight: 1500,
    quality: 94,
    rotate: 90,
  );
  // Original size vs. compressed size, in bytes
  print(file.lengthSync());
  print(result?.length); // null if compression failed
  return result;
}

/// Compress an image: File -> File (the result is written to targetPath)
Future<File?> testCompressAndGetFile(File file, String targetPath) async {
  final result = await FlutterImageCompress.compressAndGetFile(
    file.absolute.path,
    targetPath,
    quality: 88,
    rotate: 180,
  );
  print(file.lengthSync());
  print(result?.lengthSync());
  return result;
}

/// Compress an image: Asset -> Uint8List
Future<Uint8List?> testCompressAsset(String assetName) async {
  return FlutterImageCompress.compressAssetImage(
    assetName,
    minHeight: 1920,
    minWidth: 1080,
    quality: 96,
    rotate: 180,
  );
}

/// Compress an image: Uint8List -> Uint8List
Future<Uint8List> testCompressList(Uint8List list) async {
  final result = await FlutterImageCompress.compressWithList(
    list,
    minHeight: 1920,
    minWidth: 1080,
    quality: 96,
    rotate: 135,
  );
  print(list.length);
  print(result.length);
  return result;
}
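The calls above use a fixed quality. If the result instead has to stay under an upload size limit, one option is to retry with decreasing quality until it fits. The sketch below is pure Dart and takes the compressor as a parameter so the loop can be tested without a device; `compressUnderLimit`, `Compressor`, and the default quality steps are hypothetical names and values, not part of flutter_image_compress.

```dart
import 'dart:typed_data';

/// Hypothetical signature: raw bytes + quality (0-100) -> compressed bytes.
typedef Compressor = Future<Uint8List> Function(Uint8List input, int quality);

/// Hypothetical helper: lowers quality step by step until the output fits in
/// [maxBytes]. If even [minQuality] is too large, returns the smallest attempt.
Future<Uint8List> compressUnderLimit(
  Uint8List input,
  int maxBytes,
  Compressor compress, {
  int startQuality = 90,
  int minQuality = 10,
  int step = 10,
}) async {
  // Already small enough: skip re-encoding entirely.
  if (input.length <= maxBytes) return input;

  Uint8List best = input;
  for (var q = startQuality; q >= minQuality; q -= step) {
    // Always compress the original bytes, not the previous attempt,
    // so lossy artifacts do not accumulate across passes.
    final out = await compress(input, q);
    if (out.length < best.length) best = out;
    if (out.length <= maxBytes) return out;
  }
  return best;
}
```

In an app you could pass `(bytes, q) => FlutterImageCompress.compressWithList(bytes, quality: q)` as the compressor, assuming your plugin version returns a non-null `Uint8List` from `compressWithList`.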

There are two other approaches:

2. Use the imageQuality parameter of the image_picker package
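With image_picker, compression happens at pick time, so no extra package is needed. A minimal sketch, assuming image_picker 0.8 or later (where `pickImage` returns an `XFile?`); `pickCompressedImage` and the chosen quality and size limits are illustrative values:

```dart
import 'dart:io';

import 'package:image_picker/image_picker.dart';

/// Illustrative helper: picks an image from the gallery and lets the
/// plugin re-encode and downscale it before returning.
Future<File?> pickCompressedImage() async {
  final XFile? picked = await ImagePicker().pickImage(
    source: ImageSource.gallery,
    imageQuality: 70, // 0-100; lower means smaller files
    maxWidth: 1920, // scale down so the image is at most this wide
    maxHeight: 1920, // ...and at most this tall
  );
  if (picked == null) return null; // the user cancelled
  return File(picked.path);
}
```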

3. Use the flutter_native_image package

Installation

dependencies:
  flutter_native_image: ^0.0.6

Documentation

https://pub.flutter-io.cn/packages/flutter_native_image

Usage

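import 'dart:io';

import 'package:flutter_native_image/flutter_native_image.dart';

/// Compress a File with flutter_native_image
Future<File> compressFile(File file) async {
  // quality ranges from 0 to 100; 5 is extremely aggressive
  final File compressedFile = await FlutterNativeImage.compressImage(
    file.path,
    quality: 5,
  );
  return compressedFile;
}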
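A quality as low as 5 shrinks files drastically but visibly degrades them. The flutter_native_image README also shows resizing with `getImageProperties` plus `targetWidth`/`targetHeight` so the aspect ratio is kept; the sketch below wraps that idea. `compressToWidth` and its defaults are hypothetical, and the `?? 0` guards assume a null-safe package version where `ImageProperties.width`/`height` may be null.

```dart
import 'dart:io';

import 'package:flutter_native_image/flutter_native_image.dart';

/// Hypothetical helper: resizes to [targetWidth] pixels wide, scaling the
/// height to keep the aspect ratio, and re-encodes at [quality].
Future<File> compressToWidth(
  File file, {
  int targetWidth = 600,
  int quality = 80,
}) async {
  final ImageProperties props =
      await FlutterNativeImage.getImageProperties(file.path);
  final int width = props.width ?? 0;
  final int height = props.height ?? 0;

  // Unknown size, or already narrow enough: only re-encode.
  // percentage: 100 keeps the original dimensions.
  if (width <= 0 || height <= 0 || width <= targetWidth) {
    return FlutterNativeImage.compressImage(
      file.path,
      quality: quality,
      percentage: 100,
    );
  }

  // Scale the height by the same factor as the width.
  final int targetHeight = (height * targetWidth / width).round();
  return FlutterNativeImage.compressImage(
    file.path,
    quality: quality,
    targetWidth: targetWidth,
    targetHeight: targetHeight,
  );
}
```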

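How do you calculate the size of the selected image file?

Get the file length in bytes, then convert it to kilobytes or megabytes:

file.readAsBytesSync().lengthInBytes -> file size in bytes

(file.readAsBytesSync().lengthInBytes) / 1024 -> file size in kilobytes

(file.readAsBytesSync().lengthInBytes) / 1024 / 1024 -> file size in megabytes

Note that readAsBytesSync() loads the whole file into memory just to measure it; file.lengthSync() (or await file.length()) returns the same byte count from the file system without reading the contents.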
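To show the result to users, a small helper can format the byte count. A minimal sketch; `formatFileSize` and `describeFile` are illustrative names, and the helper uses 1024-based units to match the divisions above:

```dart
import 'dart:io';

/// Illustrative helper: formats a byte count as B, KB or MB (1024-based).
String formatFileSize(int bytes) {
  const kb = 1024;
  const mb = 1024 * 1024;
  if (bytes < kb) return '$bytes B';
  if (bytes < mb) return '${(bytes / kb).toStringAsFixed(2)} KB';
  return '${(bytes / mb).toStringAsFixed(2)} MB';
}

/// Reads the size from file metadata instead of loading the whole file.
String describeFile(File file) => formatFileSize(file.lengthSync());
```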

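Summary

This article covered three ways to compress images, each using a different package: flutter_image_compress, image_picker, and flutter_native_image. Try them yourself and compare which one performs better.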

That's it. I'm Jianguo (坚果).

How to Create Custom Icons in Flutter [Flutter Series 22] mp.weixin.qq.com/s/1h19t1EAaGTmrFI8gaDLWA

There is plenty more to discover.

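Implementing Continuous Background Location with AMap in a Flutter Project (iOS)

AMap (Gaode) itself already supports continuous background location (see its example documentation), and there is Flutter support for it as well (see the documentation).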

pubspec.yaml:

dependencies:
  flutter:
    sdk: flutter
  # Permissions
  permission_handler: ^5.1.0+2
  # Location
  amap_location_fluttify: ^0.20.0

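We'll use the iOS project to walk through the implementation.

iOS project (ios/Runner) configuration:

Add the location permission descriptions to Info.plist:

<key>NSLocationAlwaysAndWhenInUseUsageDescription</key>
<string>Requesting Always permission so the app can receive location updates both in the foreground and in the background (suspended or terminated)</string>
<key>NSLocationAlwaysUsageDescription</key>
<string>Your consent is required to always access your location</string>
<key>NSLocationWhenInUseUsageDescription</key>
<string>Your consent is required to access your location while the app is in use</string>

Which of these keys iOS reads depends on the system version: NSLocationAlwaysAndWhenInUseUsageDescription is used from iOS 11 onward, while NSLocationAlwaysUsageDescription only applies to iOS 10 and earlier.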

Background Modes configuration:

<key>UIBackgroundModes</key>
<array>
  <string>location</string>
  <string>remote-notification</string>
</array>

In Xcode, this corresponds to checking Location updates under Signing & Capabilities > Background Modes.

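Flutter example

Using it from Flutter looks like this:

- First, register the AMap component in the main function
- In the view, request permission before calling any location API
- Enable the background task
- Start continuous location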

main.dart:

import 'package:amap_location_fluttify/amap_location_fluttify.dart';
import 'package:flutter/material.dart';

import 'location_page.dart';

void main() {
  // Required before calling plugins ahead of runApp
  WidgetsFlutterBinding.ensureInitialized();
  // Register the AMap component (replace with your own iOS key)
  AmapLocation.instance.init(iosKey: 'xxxxxx');
  runApp(const MyApp());
}

class MyApp extends StatelessWidget {
  const MyApp({Key? key}) : super(key: key);

  // This widget is the root of your application.
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: 'Flutter Demo',
      theme: ThemeData(
        primarySwatch: Colors.blue,
      ),
      home: LocationPage(),
    );
  }
}

location_page.dart:

import 'dart:async';

import 'package:amap_location_fluttify/amap_location_fluttify.dart';
import 'package:flutter/material.dart';
import 'package:permission_handler/permission_handler.dart';

class LocationPage extends StatefulWidget {
  const LocationPage({Key? key}) : super(key: key);

  @override
  _LocationPageState createState() => _LocationPageState();
}

class _LocationPageState extends State<LocationPage> {
  String _latitude = ""; // latitude
  String _longitude = ""; // longitude

  // Keep the subscription so repeated taps don't stack listeners
  // and so it can be cancelled when the page is disposed
  StreamSubscription? _locationSubscription;

  @override
  void initState() {
    super.initState();
    // Request location permission at runtime
    requestPermission();
  }

  @override
  void dispose() {
    _locationSubscription?.cancel();
    super.dispose();
  }

  /// Request location permission at runtime
  void requestPermission() async {
    bool hasLocationPermission = await requestLocationPermission();
    if (hasLocationPermission) {
      print("Location permission granted");
    } else {
      print("Location permission denied");
    }
  }

  /// Request location permission: returns true if granted, false otherwise
  Future<bool> requestLocationPermission() async {
    // Check the current "Always" permission status
    var status = await Permission.locationAlways.status;
    if (status == PermissionStatus.granted) {
      // Already granted
      return true;
    }
    // Not granted yet: ask once
    status = await Permission.location.request();
    return status == PermissionStatus.granted;
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: Text("Geolocation demo"),
      ),
      body: Center(
        child: Column(
          mainAxisAlignment: MainAxisAlignment.center,
          children: [
            // e.g. latitude: 36.570091461155336, longitude: 109.5080830206976
            Text("Latitude: $_latitude"),
            Text("Longitude: $_longitude"),
            SizedBox(height: 20),
            ElevatedButton(
              child: Text('Start location'),
              onPressed: () {
                _startTheLocation();
              },
            ),
          ],
        ),
      ),
    );
  }

  Future<void> _startTheLocation() async {
    if (await Permission.location.request().isGranted) {
      // Enable continuous background location
      await AmapLocation.instance.enableBackgroundLocation(
        10,
        BackgroundNotification(
          contentTitle: 'contentTitle',
          channelId: 'channelId',
          contentText: 'contentText',
          channelName: 'channelName',
        ),
      );
      // Cancel any previous listener, then listen for continuous updates
      await _locationSubscription?.cancel();
      _locationSubscription =
          AmapLocation.instance.listenLocation().listen((location) {
        if (!mounted) return;
        setState(() {
          _latitude = location.latLng.latitude.toString();
          _longitude = location.latLng.longitude.toString();
        });
        print("Location update: {$_latitude, $_longitude}");
      });
    } else {
      openAppSettings();
    }
  }
}

Summary

For continuous background location with AMap, there are only two core calls.

Enable the background task:

AmapLocation.instance.enableBackgroundLocation(id, notification)

Run continuous location:

AmapLocation.instance.listenLocation().listen((location) {
  // do something
});

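Flutter Development Notes, Part 1

Error: Cannot run with sound null safety, because the following dependencies
don't support null safety:

This happens when some dependencies have not yet migrated to null safety. As a workaround, build with sound null safety turned off (the same flag works with flutter run):

flutter build ios --no-sound-null-safety

This flag only exists on Dart 2.x toolchains; Dart 3 requires sound null safety, so the real fix there is to upgrade or replace the unmigrated dependencies.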

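Upgrading Flutter to a Specific Version: Upgrade and Rollback

Related commands:

Show the version: flutter --version

Check the environment: flutter doctor

Show the channel: flutter channel

Switch channel (stable, beta, dev, master): flutter channel stable

Upgrade to the latest version: flutter upgrade

Switch to a specific version: flutter upgrade does not take a version argument; instead, run git checkout 2.2.3 inside the Flutter SDK directory, then run flutter doctor

Roll back to the previously active version on the current channel: flutter downgrade (it also takes no version argument)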

You can also roll back with git:

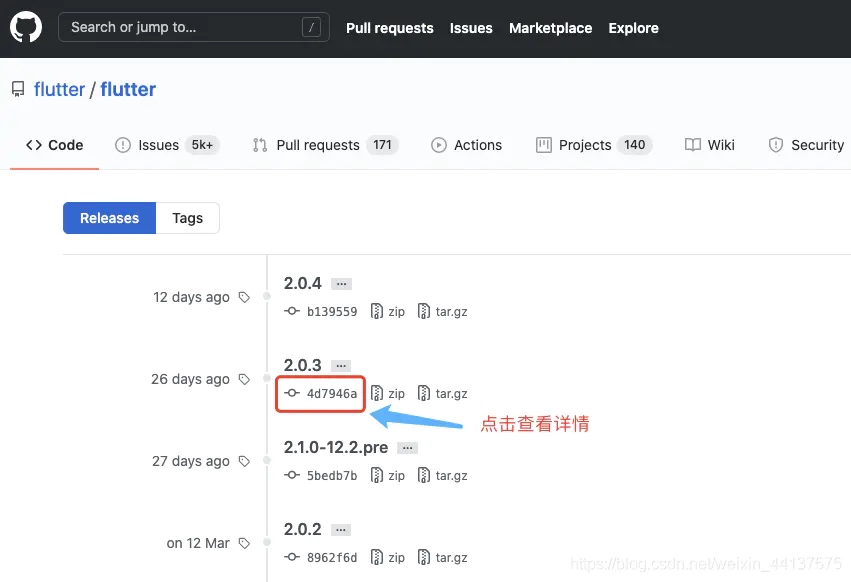

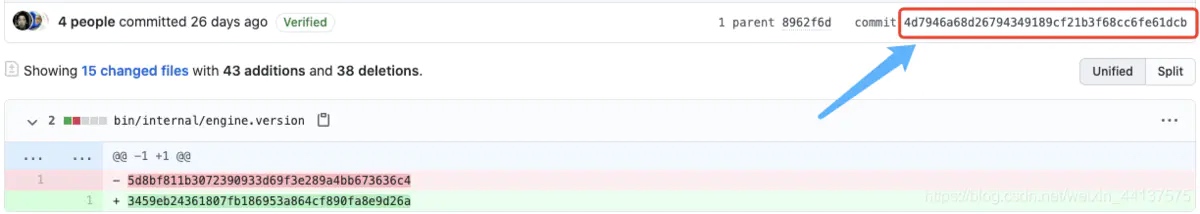

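- Find the commit of the version you want to roll back to in the Flutter GitHub repository
- cd into the directory where the Flutter SDK is installed and run: git reset --hard [commit_id]
  For example: git reset --hard 4d7946a68d26794349189cf21b3f68cc6fe61dcb
- Check the Flutter version: flutter doctor or flutter --version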

Copyright © 2015 Powered by MWeb, 豫ICP备09002885号-5